Let's look at all the wonderful things linear dynamics can do in two dimensions.

But what is a linear dynamical system in two dimensions you may ask? There are two forms. The first is the discrete time case, in which we have a sequence formed by mapping the previous step through some linear transformation:

\[ \mathbf{x}_{k+1} = \mathbf{A}\mathbf{x}_k \]

Where \(\mathbf{x}_k \in \mathbb{R}^2\) is a two dimensional vector called the state vector at time \(k\) and \(\mathbf{A} \in \mathbb{R}^{2\times2}\) is a two by two matrix called the transition matrix. We can equivalently write it as a linear system of scalars.

\[ x_{k+1} = ax_k + by_k \] \[ y_{k+1} = cx_k + dy_k \]

Which comes from setting:

\[ \mathbf{x}_k = \begin{bmatrix} x_k \\ y_k \end{bmatrix} \] \[ \mathbf{A} = \begin{bmatrix} a & b \\ c & d \end{bmatrix} \]

Another equivalent formulation gives rise to calling a discrete time dynamical system a kind of difference equation, because we set the difference between successive values to satisfy an equation:

\[ \mathbf{x}_{k+1} - \mathbf{A}\mathbf{x}_k = 0 \]

We can now solve the initial value problem, that is, we can find \(\mathbf{x}_k\) for all \(k\in\mathbb{N}\) if we know a specific value \(\mathbf{x}_0\). Namely, we have that:

\[ \mathbf{x}_k = \mathbf{A}^k \mathbf{x}_0 \]

If \(\mathbf{A}\) is invertible, we can actually go backwards and extend this sequence to work for all \(k\in\mathbb{R}\) by saying that \(\mathbf{A}^{-k} = (\mathbf{A}^{-1})^k\).

Anyways, the second kind of two dimensional dynamical system is a two dimensional system of ordinary differential equations. It comes in the form:

\[ \mathbf{x}' = \mathbf{A}\mathbf{x} \]

Or equivalently as a system (hence the name system of ordinary differential equations):

\[ x' = ax + by \] \[ y' = cx + dy \]

An ODE can be thought of as the continuous time version of a discrete time dynamical system. We can think of an ordinary differential equation as the result of taking more and more steps of a decreasing size. Indeed, this leads us to Euler's method for approximating an ordinary differential equation as a discrete time dynamical system given an initial condition \(x(0)\) up to a time \(t_e\).

\[ \mathbf{y}_{k+1} = \mathbf{y}_k + \frac{1}{n}\mathbf{A}\mathbf{y}_k = (\mathbf{I} + \frac{1}{n}\mathbf{A})\mathbf{y}_k \] \[ \mathbf{y}_0 = \mathbf{x}(0) \] \[ \mathbf{x}(t) \approx \hat{\mathbf{x}}(t) \mathbf{y}_{\frac{nt}{t_e}} = (\mathbf{I} + \frac{1}{n}\mathbf{A})^{\frac{nt}{t_e}} \mathbf{x}(0) \]

The approximation error decays linearly (\(\mathbf{x}(t) - \hat{\mathbf{x}}(t) = \Omega(\frac{1}{n})\)) as \(n\) goes off to infinity. Euler's method is actually way more general than this, but that's a story for another time.

In the linear case, especially in two dimensions, we can actually do a lot better at solving the system than just using Euler's method (technically, you can do better even for nonlinear ODEs using implict methods and higher order Runge-Kutta methods, but that's besides the point). We can actually find a nice and simple closed form of the solution in every case!

First, let's think about what a solution for a system of ordinary differential equations looks like in general. Consider a locally Lipschitz continuous (a weaker condition than continuously differentiable, and a condition our linear equations nock out of the park) function \(f: \mathbb{R}^2 \to \mathbb{R}^2\). We can make a system of ordinary differential equations by setting \(\mathbf{x}' = f(\mathbf{x})\). By the Picard-Lindelöf Theorem (which is why we need local Lipschitz continuity), this system has a unique solution given \(\mathbf{x}(0)\). Let's plug all of our solutions for the various initial conditions into a function called a flow.

\[ \phi : \mathbb{R} \times \mathbb{R}^2 \to \mathbb{R}^2 \] \[ \phi_0(\mathbf{x}) = \mathbf{x} \] \[ \frac{d}{dt}\phi_t(\mathbf{x}) = f(\phi_t(\mathbf{x})) \]

A flow actually has some nice properties with regards to composition. Because the solutions are unique and \(f\) doesn't depend on \(t\), feeding the output of the flow back into the flow is just advancing time even more.

\[ \phi_t(\phi_s(\mathbf{x})) = \phi_{t+s}(\mathbf{x})

This property is somewhat reminiscint of the properties of exponentials. Indeed, the exponential function multiplied by a constant looks a lot like a flow. Recall that

\[ e^0x = x \] \[ \frac{d}{dt}e^tx = e^tx \] \[ e^se^tx = e^{s+t}x \]

The reason \(xe^t\) looks like a flow is because it is a flow! Specifically, it's the flow of the linear one dimensional ODE \(x' = x\). Because flows are unique, this is actually a way you can define \(e^t\). In my opinion, this, together with \(\ln{t} = \int_1^t \frac{1}{s} ds\) is the nicest way to define exponentiation by non-integer powers (sadly we need to use Taylor series if we want to actually calculate the flow, so we need integer powers defined elsewhere). Armed with this, we can easily enough find the flow of any one dimensional linear ODE.

\[ x' = ax \] \[ x(t) = e^{at}x(0) \]

We just raise \(e\), the fundamental base of all exponential functions, to the power of the coefficient in our derivative times the time, and then multiply by our initial condition.

"This is really simple. It would be nice if we could do this to our two dimensional case, but that would require raising \(e\) to the power of the matrix \(\mathbf{A}t\), and we can't raise \(e\) to a matrix," you might say. The thing is, this is mathematics. We know that our flow exists, so we can just define this flow to be \(\phi_t(\mathbf{x}) = e^{\mathbf{A}t}\mathbf{x}\) and then just make up \(e^{\mathbf{A}t}\) to have all the properties we need. Nothing's stopping us.

Technically, we can't get by with just the existance of the flow; we also need to show that when we scale \(\mathbf{A}\) by \(c\) in the differential equation, that's the same thing as scaling \(t\) by \(c\) in the flow. Here's a quick proof:

Suppose we have the flow for a linear system of ODEs: \[ \frac{d}{dt}\phi_t(\mathbf{x}) = \mathbf{A}\mathbf{x} \] Now consider: \[ \frac{d}{dt}\phi_{ct}(\mathbf{x}) = (\frac{d}{dt} ct)(\frac{d}{dct}\phi_{ct}(\mathbf{x})) = c\mathbf{A}\mathbf{x} \] So scaling the time in the flow is equal to scaling the transition matrix. QED.

To figure out what our new function, the matrix exponential, looks like, let's first look at what the regular scalar exponential looks like. It's power series, which converges and is an equivalent definition for \(e^t\) is given by:

\[ e^t = \sum_{i=0}^\infty \frac{t^i}{i!} \]

If we differentiate with respect to time, we drop the power by one (an \(\Theta(t^i)\) term becomes \(\Theta(t^{i-1})\)), and the coefficient from the power rule cancel out the biggest term in the factorial. All together, the term \(\frac{t^i}{i!}\) gets turned into \(\frac{t^{i-1}}{(i-1)!}\) — it gets moved up one. We move each of the infinitely many terms up one and are left with the same thing as we started with.

Now let's put the matrix in there.

\[ \frac{d}{dt} \sum_{i=0}^\infty \frac{(\mathbf{A}t)^i}{i!} = \frac{d}{dt}\sum_{i=0}^\infty \frac{\mathbf{A}^it^i}{i!} = \sum_{i=1}^\infty \frac{\mathbf{A}^it^{i-1}}{(i-1)!} = \sum_{i=1}^\infty \frac{\mathbf{A}(\mathbf{A}t)^{i-1}}{(i-1)!} = \sum_{i=0}^\infty \frac{\mathbf{A}(\mathbf{A}t)^i}{i!} = \mathbf{A}\sum_{i=0}^\infty \frac{(\mathbf{A}t)^i}{i!} \]

Just like with \(e^{at}\), the \(\mathbf{A}\) just popped out when we differentiated. This power series is the matrix exponential we need.

With this equivalent definition, we can proove a nice property that will be helpful in about 7 or so paragraphs: when \(\mathbf{A}\) and \(\mathbf{B}\) commute (\mathbf{AB}=\mathbf{BA}\),\ they act enough like regular scalars that the matrix exponential works like the regular exponential with regards to their sum: \(e^{\mathbf{A}+\mathbf{B}} = e^\mathbf{A}e^\mathbf{B}\).

Take the power series definition: \[ e^{\mathbf{A}+\mathbf{B}} = \sum_{k=0}^\infty \frac{(\mathbf{A}+\mathbf{B})^k}{k!} \] Because the matrices commute (so strings of multiplications are equal if they have the same number of \(\mathbf{A}\)s and \(\mathbf{B}\)s), we can use the binomial theorem: \[ e^{\mathbf{A}+\mathbf{B}} = \sum_{k=0}^\infty\sum_{i=0}^k \frac{\frac{k!}{i!(k-i)!}\mathbf{A}^i\mathbf{B}^{k-i}}{k!} = \sum_{k=0}^\infty\sum_{i=0}^k (\frac{\mathbf{A}^i}{i!})(\frac{\mathbf{B}^{k-i}}{(k-i)!}) \] For any \(i,j\in\mathbb{N}\cup\{0\}\), there is exactly one solution \(k\in\mathbb{N}\cup\{0\}\) to \(j=k-i\) (namely \(k=j+i\)). This is exactly the same as the number of ways we can get the product inside the summationto have a certain power \(i\) on \(\mathbf{A}\) and \(j\) on \(\mathbf{B}\). As such, we can change the variables: \[ e^{\mathbf{A}+\mathbf{B}} = \sum_{i=0}^\infty\sum_{j=0}^\infty (\frac{\mathbf{A}^i}{i!})(\frac{\mathbf{B}^j}{j!}) \] Now it's easy to split the series and get the needed result. \[ e^{\mathbf{A}+\mathbf{B}} = (\sum_{i=0}^\infty\frac{\mathbf{A}^i}{i!})(\sum_{j=0}^\infty\frac{\mathbf{B}^j}{j!}) = e^\mathbf{A} e^\mathbf{B} \] QED.

In order to see more of how the matrix exponential acts, we need to look at the individual terms. Namely, we need to look at the \(\mathbf{A}^i\) parts.

One very powerful result in linear algebra is that we can write an arbitrary (square) matrix \(\mathbf{A}\) as the product of complex matrices \(\mathbf{A} = \mathbf{V}\mathbf{\Lambda}\mathbf{V}^{-1} such that either \(\mathbf{\Lambda}\) is diagonal or, below the diagonal, there are zeros, two units above the diagonal, there are zeros, directly above the diagonal, there are zeros and ones, and if the entry \(\Lambda_{i,i+1} = 1\) then \(\Lambda_{i,i} = \Lambda_{i+1,i+1}\). That is, the matrix \(\mathbf{\Lambda}\) is in Jordan normal form. If \(\mathbf{\Lambda}\) isn't diagonal, then the matrix \(\mathbf{A}\) is called defective because it makes a lot of stuff, including matrix exponentials and other stuff we'll do later, harder. For reasons that will be clear later, we'll call the diagonal entries \(\lambda_i / \Lambda_{i,i}\). We can now describe all possible two by two \(\mathbf{\Lambda}\)s:

\[ \text{diagonal:} \; \begin{bmatrix}\lambda_1&0\\0&\lambda_2\end{bmatrix} \quad \text{defective:} \; \begin{bmatrix}\lambda&1\\\lambda&0\end{bmatrix} \]

The restriction to two dimensions drastically simplifies the possible \(\mathbf{\Lambda}\)s.

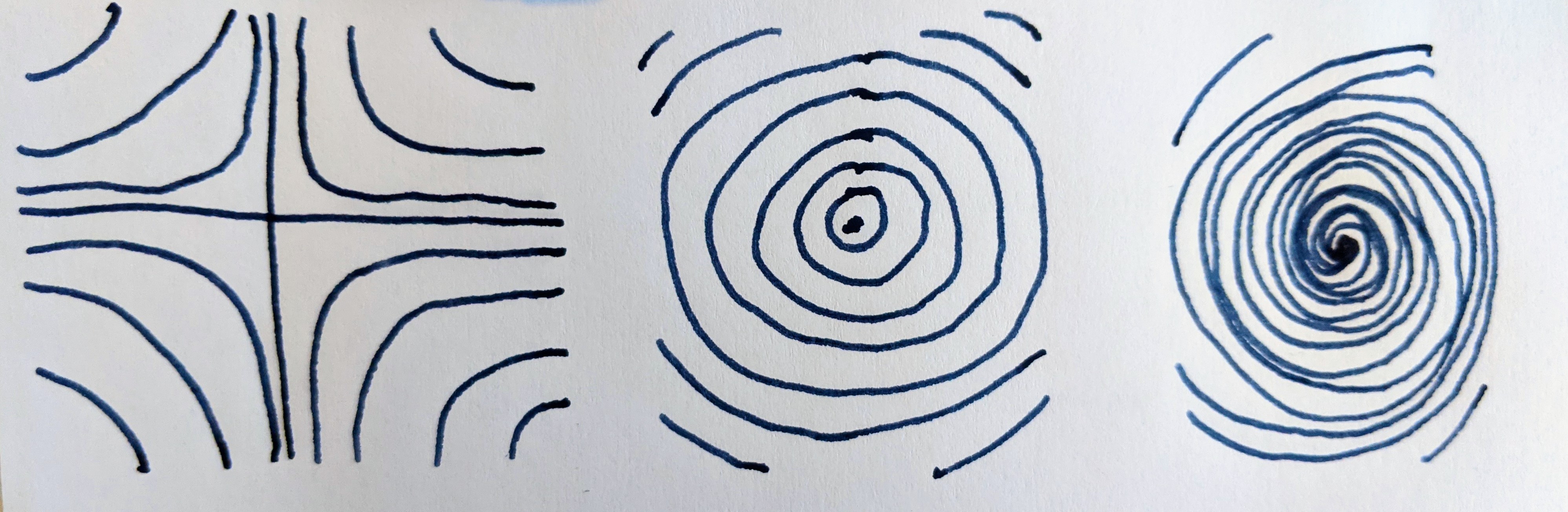

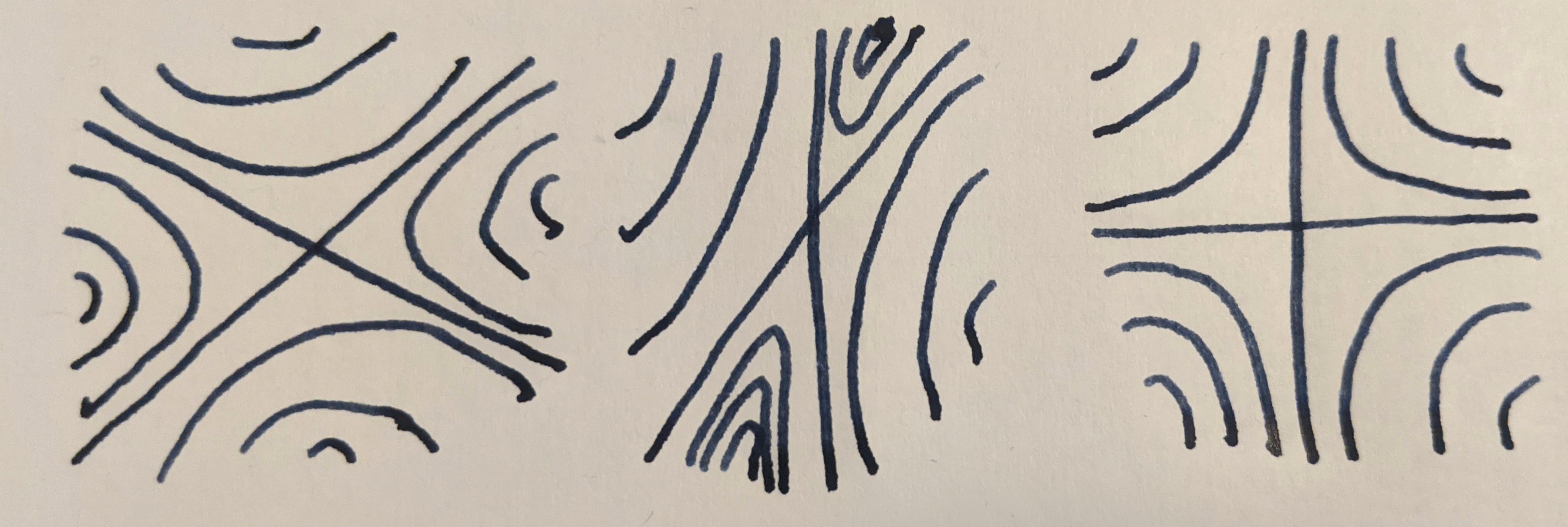

The other relevant matrix is \(\mathbf{V}\), which is invertible and sandwiches \(\mathbf{\Lambda}\). This means \(\mathbf{V}\) is a change of basis matrix which converts the standard basis of \(\mathbb{R}^2\) into a basis where the linear transformation of \(\mathbf{A}\) is in Jordan normal form (specifically, it is \(\mathbf{\Lambda}\)). This means that all behavior (namely orbits and flows) a linear dynamical system can have is just some skewing and rotating of the behavior on a Jordan normal form transition matrix.

The cool thing about \(\mathbf{A} = \mathbf{V}\mathbf{\Lambda}\mathbf{V}^{-1}\) is that it ends with the inverse of the matrix that it starts with. When you raise \(\mathbf{A}^k\), the \(\mathbf{V}^{-1}\)s and \(\mathbf{V}\)s on the inside cancel out, and you end up with these amazing equations:

\[ \mathbf{A}^k = (\mathbf{V}\mathbf{\Lambda}\mathbf{V}^{-1})^k = \mathbf{V}\mathbf{\Lambda}^k\mathbf{V}^{-1} \] \[ e^{\mathbf{A}} = \sum\frac{\mathbf{A}^i}{i!} = \sum\frac{\mathbf{V}\mathbf{\Lambda}^i\mathbf{V}^{-1}}{i!} = \mathbf{V}\sum\frac{\mathbf{\Lambda}}{i!}\mathbf{V}^{-1} = \mathbf{V}e^\mathbf{\Lambda}\mathbf{V}^{-1} \]

This means we can just find the powers and matrix exponential of the Jordan normal form matrix \(\mathbf{\Lambda}\), which has a nice and easy general closed form for both of our cases.

\[ \begin{bmatrix}\lambda_1&0\\0&\lambda_2\end{bmatrix}^k = \begin{bmatrix}\lambda_1^k&0\\0&\lambda_2^k\end{bmatrix} \quad\quad\quad\quad e^{\begin{bmatrix}\lambda_1&0\\0&\lambda_2\end{bmatrix}} = \begin{bmatrix}e^\lambda_1&0\\0& e^\lambda_2\end{bmatrix} \] \[ \begin{bmatrix}\lambda&1\\0&\lambda\end{bmatrix}^k = \begin{bmatrix}\lambda^k& k\lambda^{k-1}\\0&\lambda^k\end{bmatrix} \quad\quad\quad\quad e^{\begin{bmatrix}\lambda&1\\0&\lambda\end{bmatrix}} = \begin{bmatrix}e^\lambda& e^\lambda\\0& e^\lambda\end{bmatrix} \]

The diagonalisable case is simple enough, but the defective case is a bit harder. You can prove the power easily enough by straightforeward induction, but the defective matrix exponential is instead best dealt with by splitting the diagonal from the one above the diagonal (called the nilpotent part).

We'll actually show a slight generalisation which will be helpful later. \[ e^\mathbf{\Lambda} e^{\begin{bmatrix}\lambda&1\\0&\lambda\end{bmatrix}t} = \begin{bmatrix}e^{\lambda t}& te^{\lambda t}\\0& e^{\lambda t}\end{bmatrix} \] We can split the matrix \(\mathbf{\Lambda}t\) into \(\mathbf{\Lambda} = \mathbf{D}t+\mathbf{N}t\) where \(\mathbf{D}\) is diagonal and \(\mathbf{N}^2=\mathbf{0}\) is nilpotent. Specifically, \[ \mathbf{D} = \begin{bmatrix}\lambda&0\\0&\lambda\end{bmatrix} \quad \mathbf{N} = \begin{bmatrix}0&1\\0&0\end{bmatrix} \] These two parts commute: \[ \mathbf{D}\mathbf{N} = \begin{bmatrix}0&\lambda\\0&0\end{bmatrix} = \mathbf{N}\mathbf{D} \] Because of this we can use the property we prooved earlier, \[ e^{(\mathbf{D}+\mathbf{N})t} = e^\mathbf{Dt}e^\mathbf{Nt} \] The diagonal matrix exponential is easy enough. For the nilpotent part, because it becomes zero when raised to a big enough power, the Taylor series of its matrix exponential has no terms past the linear one, and is the finite polynomial: \[ e^{\mathbf{N}t} = \frac{(\mathbf{N}t)^0}{0!} + \frac{(\mathbf{N}t)^1}{1!} = \mathbf{I} + \mathbf{N}t = \begin{bmatrix}1& t\\0&1\end{bmatrix} \] We can now multiply out the full matrix exponential: \[ e^{(\mathbf{D}+\mathbf{N})t} = \begin{bmatrix}e^{\lambda t}&0\\0& e^{\lambda t}\end{bmatrix} \begin{bmatrix}1& t\\0&1\end{bmatrix} = \begin{bmatrix}e^{\lambda t}& te^{\lambda t}\\0& e^{\lambda t}\end{bmatrix} \] QED.

Before we move on, let me make an important connection to a big concept in linear algebra. Let's split up our matrix \(\mathbf{V}\) into column vectors.

\[ \mathbf{V} = \begin{bmatrix}\mathbf{v}_1 & \mathbf{v}_2\end{bmatrix} \]

Let's see what happens when we multiply these with our matrix \(\mathbf{A}\) in the diagonalisable and defective case:

\[ \mathbf{A}\mathbf{v}_1 = \mathbf{V}\begin{bmatrix}\lambda_1&0\\0&\lambda_2\end{bmatrix}\mathbf{V}^{-1}\mathbf{v}_1 = \mathbf{V}\begin{bmatrix}\lambda_1&0\\0&\lambda_2\end{bmatrix}\mathbf{e}_1 = \mathbf{V}\lambda_1\mathbf{e}_1 = \lambda_1\mathbf{v}_1 \] \[ \mathbf{A}\mathbf{v}_2 = \mathbf{V}\begin{bmatrix}\lambda_1&0\\0&\lambda_2\end{bmatrix}\mathbf{V}^{-1}\mathbf{v}_2 = \mathbf{V}\begin{bmatrix}\lambda_1&0\\0&\lambda_2\end{bmatrix}\mathbf{e}_2 = \mathbf{V}\lambda_2\mathbf{e}_2 = \lambda_1\mathbf{v}_2 \] \[ \mathbf{A}\mathbf{v}_1 = \mathbf{V}\begin{bmatrix}\lambda&1\\0&\lambda\end{bmatrix}\mathbf{V}^{-1}\mathbf{v}_1 = \mathbf{V}\begin{bmatrix}\lambda&1\\0&\lambda\end{bmatrix}\mathbf{e}_1 = \mathbf{V}\lambda\mathbf{e}_1 = \lambda\mathbf{v}_1 \] \[ \mathbf{A}\mathbf{v}_1 = \mathbf{V}\begin{bmatrix}\lambda&1\\0&\lambda\end{bmatrix}\mathbf{V}^{-1}\mathbf{v}_2 = \mathbf{V}\begin{bmatrix}\lambda&1\\0&\lambda\end{bmatrix}\mathbf{e}_2 = \mathbf{V}(\mathbf{e}_1+\lambda\mathbf{e}_2) = \mathbf{v}_1+\lambda\mathbf{v}_2 \]

The columns of \(\mathbf{V}\) are eigenvectors, and the \(\lambda\)s are eigenvalues! Well, all columns aside from \(\mathbf{v}_2\) in the defective case, but it acts kinda like it in some ways, so it is a generalised eigenvector.

Armed with this, we can look at what dynamics do.

Here's a little demo which shows off 2d discrete time linear dynamics — dynamics of the form: \[ \mathbf{x}_{k+1} = \begin{bmatrix}a& b\\c& d\end{bmatrix}\mathbf{x}_k \]

Here's how you can make bold and italic text.

Here's how you can add an image:

Here's how to make a list:

To learn more HTML/CSS, check out these tutorials!